Creating a Scraper for Multiple URLs, Simple Method

Important Note: The tutorials you will find on this blog may become outdated with new versions of the program. We have now added a series of built-in tutorials in the application which are accessible from the Help menu.

You should run these to discover the Hub.

This tutorial was created using version 0.8.2. The Scraper Editor interface changed a long time ago: many more features were added and some controls now have new names. The following can still be a good complement to get acquainted with scrapers. The Scraper Editor can now be found in the ‘Scrapers’ view instead of ‘Source’, but the principle remains fundamentally the same.

Now that we’ve learned how to create a scraper for a single URL, let’s try something a little more advanced. In this lesson we’ll learn how to create a scraper that can be applied to a whole list of URLs, using a simple method suited for beginners. The next lesson demonstrates a more complex scraper that uses regular expressions, for our tech-savvy users. Geeks, feel free to skip to: Creating a Scraper for Multiple URLs using Regular Expressions.

Recap: For complex web pages or specific needs, when the automatic data extraction functions (table, list, guess) don’t give you exactly what you are looking for, you can extract data manually by creating your own scraper. Scrapers are saved on your computer and can then be reapplied or shared with other users, as desired.

2. Choose your source. Today let’s use: http://en.wikipedia.org/wiki/Leading_firms_by_activity

Make sure this address is displayed in the address bar. In the Page view, you will see a list of leading firms by activity. Today we will make a report detailing the company information for each of these firms.

Traditionally, you’d have to click on each link, then copy and paste the information into an Excel spreadsheet, but with the scraper function we’re going to save a lot of time and energy.

3. Choose the information you want to extract and create your scraper:

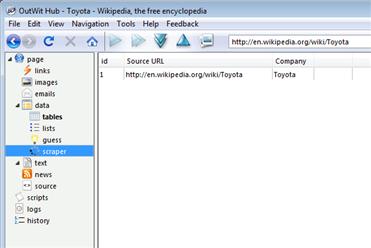

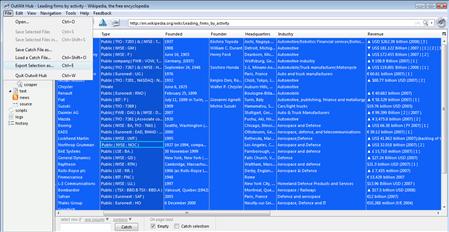

If you click on the List view you can see all the URLs and their related companies:

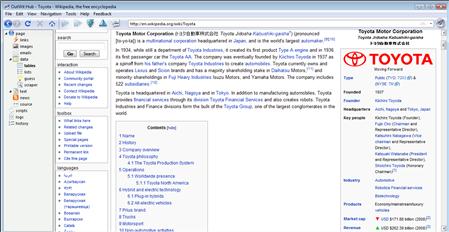

Select a random company to get started, say Toyota. Double-click on the link for Toyota and you will be redirected to the Toyota article in the Page view. On the right, there is a box with the company information and logo. This is what we’ll use to populate our spreadsheet for each company contained in the original list.

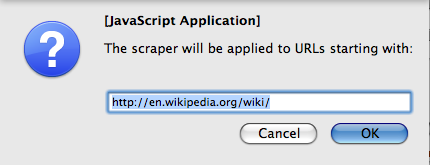

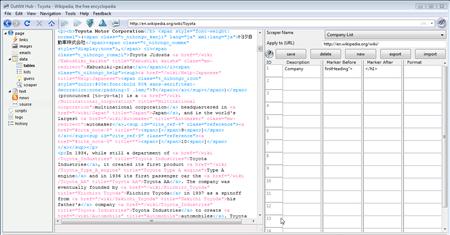

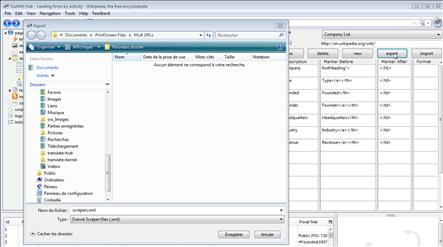

Click Source in the view list on the left-hand side to open the scraper editor. Then click New and the following box will appear. (If you have already created a scraper, you will see its information populated on the right.)

You will be asked to enter a URL that satisfies: “scraper will be applied to URLs starting with.” For this example use: http://en.wikipedia.org/wiki/ since all the companies’ URLs begin with this address. (Unfortunately, in the case of Wikipedia, all articles start with the same string.) You will then be asked to name the scraper and click OK.
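The “URLs starting with” rule amounts to a plain prefix test. Here is a minimal Python sketch of that idea, written outside of OutWit Hub; the function name `scraper_applies` is made up for illustration and is not part of the program.

```python
# Hypothetical helper: mimics the "applied to URLs starting with" rule.
SCRAPER_PREFIX = "http://en.wikipedia.org/wiki/"


def scraper_applies(url: str, prefix: str = SCRAPER_PREFIX) -> bool:
    """Return True when the URL begins with the scraper's prefix."""
    return url.startswith(prefix)


if __name__ == "__main__":
    print(scraper_applies("http://en.wikipedia.org/wiki/Toyota"))  # True
    print(scraper_applies("http://www.example.com/Toyota"))        # False
```

Because every Wikipedia article shares this prefix, the test also matches articles that have nothing to do with companies, which is why the choice of URLs in the list matters later on.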

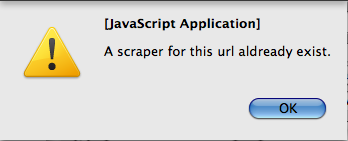

If you have already created a scraper for this URL you will see the following error:

You can create multiple scrapers for the same URL, but you can only have one loaded at a time in OutWit Hub. In order to create a new scraper for the same URL you will have to click the Delete button and then create your new scraper. If you do click Delete, however, all of your information will be lost unless you have exported the scraper. At the end of this tutorial you will learn how to export and import scrapers.

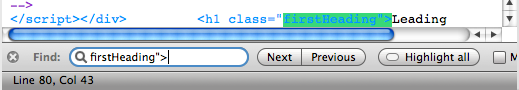

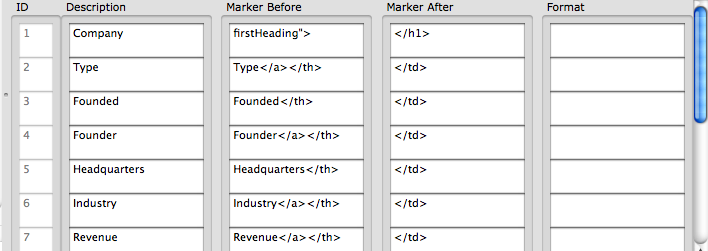

The first column will be named Company, so enter that in the description field. In the source code box you can see Toyota in black, which is the value we want in this field. The text strings in black are the values that appear on the web page; when we use the scraper, they are the only values that will appear in our results. We need to pick the most logical markers immediately preceding and following Toyota. There is no exact rule for how much of the text before or after the value you need to use, but the Marker Before should be specific and should not be repeated elsewhere in the document. This ensures that you get the correct value when applying the scraper to multiple pages. In our example, firstHeading"> is quite specific, so copy and paste it into the Marker Before field. If the Marker Before is unique, the Marker After can be less specific: </h1> will do the trick.
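The marker idea can be sketched in a few lines of Python. This is only an illustration of “take the text between a Marker Before and a Marker After”, not how OutWit Hub is implemented; `extract_between` and the sample source string are assumptions made up for the example.

```python
from typing import Optional


def extract_between(source: str, marker_before: str, marker_after: str) -> Optional[str]:
    """Return the text between the first marker_before and the next marker_after."""
    start = source.find(marker_before)
    if start == -1:
        return None  # Marker Before not found
    start += len(marker_before)
    end = source.find(marker_after, start)
    if end == -1:
        return None  # Marker After not found after the value
    return source[start:end].strip()


# Simplified, made-up stand-in for the page source.
SAMPLE_SOURCE = '<h1 id="firstHeading" class="firstHeading">Toyota</h1>'

if __name__ == "__main__":
    print(extract_between(SAMPLE_SOURCE, 'firstHeading">', "</h1>"))  # Toyota
```

Note that the search for the Marker After only starts once the Marker Before has been found, which is why a unique Marker Before lets the Marker After be something as common as </h1>.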

To be absolutely sure the marker is unique, you can use the Find command to count how many times firstHeading"> occurs in the document. (If Cmd-F/Ctrl-F does not open the Find box, click in the source code box to give it the focus and try again.)
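The same uniqueness check can be expressed in Python by counting occurrences of the marker. This is a sketch under the assumption that you have the page source as a string; the helper names and the sample source are invented for the example.

```python
def count_marker(source: str, marker: str) -> int:
    """Count non-overlapping occurrences of the marker in the source."""
    return source.count(marker)


def is_unique_marker(source: str, marker: str) -> bool:
    """A good Marker Before appears exactly once in the page."""
    return count_marker(source, marker) == 1


# Simplified, made-up stand-in for the page source.
SAMPLE_SOURCE = (
    '<h1 class="firstHeading">Toyota</h1>'
    '<p class="intro">Some introductory text.</p>'
)

if __name__ == "__main__":
    print(is_unique_marker(SAMPLE_SOURCE, 'firstHeading">'))  # True
    print(is_unique_marker(SAMPLE_SOURCE, '">'))              # False: too generic
```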

Now let’s see if this works. Press Save, then click Scraper in the view list, then Refresh. As you can see, we are good to go, so let’s move on.

Try to see if you can complete the next few fields by yourself. The column names will be: Type, Founded, Founder, Headquarters, Industry, and Revenue. Remember you should check your work periodically by clicking: Save-Scraper-Refresh.
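To picture what a multi-column scraper amounts to, here is a rough Python sketch: one (Marker Before, Marker After) pair per column, applied to a single page. The page source, the company name and every marker below are invented for illustration; the markers you find in a real article’s source will look different.

```python
from typing import Dict, Tuple

# Made-up, simplified page source; real article markup is more complex.
SAMPLE_SOURCE = (
    '<h1 class="firstHeading">Example Motors</h1>'
    "<tr><th>Type</th><td>Public</td></tr>"
    "<tr><th>Founded</th><td>1950</td></tr>"
)

# Column name -> (Marker Before, Marker After). All markers are assumptions.
FIELDS: Dict[str, Tuple[str, str]] = {
    "Company": ('firstHeading">', "</h1>"),
    "Type": ("<th>Type</th><td>", "</td>"),
    "Founded": ("<th>Founded</th><td>", "</td>"),
    "Founder": ("<th>Founder</th><td>", "</td>"),
}


def scrape_page(source: str, fields: Dict[str, Tuple[str, str]]) -> Dict[str, str]:
    """Build one result row; a column stays empty when its markers are missing."""
    row = {}
    for column, (before, after) in fields.items():
        start = source.find(before)
        end = source.find(after, start + len(before)) if start != -1 else -1
        row[column] = source[start + len(before):end].strip() if end != -1 else ""
    return row


if __name__ == "__main__":
    print(scrape_page(SAMPLE_SOURCE, FIELDS))
```

In this sample the Founder column comes back empty because the made-up page has no Founder row, which mirrors what happens when a marker does not exist on a given page.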

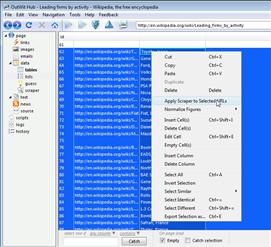

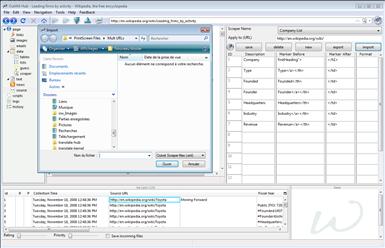

We have successfully created our scraper, but now we need to apply it to all the URLs from our list. In the Page view, reload our initial page: http://en.wikipedia.org/wiki/Leading_firms_by_activity. Then select the List view, highlight the links for the companies, right-click, and select “Apply Scraper to Selected URLs.”

In the List view select only the values that relate to the links for the companies. In this case it would be rows 62-199.

Now we can go to the Scraper view and see our list loading.
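Conceptually, applying a scraper to the selected URLs is a loop: one result row per page. The Python sketch below illustrates that loop without touching the network; the page sources are supplied as a dictionary. The URLs, page contents, function names and the ‘URL Source’ column label are assumptions chosen for the example.

```python
from typing import Callable, Dict, List


def title_scraper(source: str) -> Dict[str, str]:
    """Tiny one-column scraper: text between firstHeading"> and </h1>."""
    before, after = 'firstHeading">', "</h1>"
    start = source.find(before)
    if start == -1:
        return {"Company": ""}
    start += len(before)
    end = source.find(after, start)
    return {"Company": source[start:end] if end != -1 else ""}


def apply_scraper(
    pages: Dict[str, str],
    scraper: Callable[[str], Dict[str, str]],
) -> List[Dict[str, str]]:
    """Run the scraper on every page and remember which URL each row came from."""
    rows = []
    for url, source in pages.items():
        row = {"URL Source": url}
        row.update(scraper(source))
        rows.append(row)
    return rows


# Made-up pages standing in for the selected links.
PAGES = {
    "http://en.wikipedia.org/wiki/Example_A": '<h1 class="firstHeading">Example A</h1>',
    "http://en.wikipedia.org/wiki/Example_B": '<h1 class="firstHeading">Example B</h1>',
}

if __name__ == "__main__":
    for row in apply_scraper(PAGES, title_scraper):
        print(row)
```

Keeping the source URL in each row makes it possible to match results back to the original list of links afterwards.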

4. Export the data:

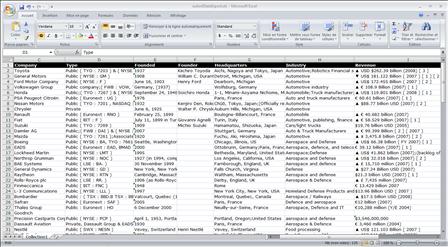

To save the results in a spreadsheet, select all (Ctrl-A or Cmd-A). Then select File and “Export Selection As…” Finally, choose a name and destination for your file, and click Save.

You can see that OutWit Hub has successfully created our spreadsheet.
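If you ever need to produce a similar spreadsheet yourself, rows like these can be written as CSV, which spreadsheet programs open directly. This is a generic sketch using Python’s standard `csv` module, not a description of the file OutWit Hub writes; the function name and sample rows are made up.

```python
import csv
import io
from typing import Dict, List


def rows_to_csv(rows: List[Dict[str, str]], columns: List[str]) -> str:
    """Serialize rows to CSV text with a header line, one line per row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=columns,
        extrasaction="ignore",  # skip keys that are not listed in columns
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)  # missing keys are written as empty cells
    return buffer.getvalue()


# Made-up sample rows.
ROWS = [
    {"Company": "Example A", "Type": "Public"},
    {"Company": "Example B"},
]

if __name__ == "__main__":
    print(rows_to_csv(ROWS, ["Company", "Type"]), end="")
```

To write a file instead of a string, the same writer can be pointed at a file opened with `open(path, "w", newline="", encoding="utf-8")`.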

Notice that the results contain unwanted characters: (▼[1] [2] [3] [4]). In the more advanced tutorial, we will learn how to remove them using RegExp. Click here to skip to the next tutorial.
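As a preview of the idea, here is how such leftovers could be stripped with a regular expression in Python once the data has been exported. The pattern is an assumption written for this example and is not necessarily the one used in the advanced tutorial; the sample value is made up.

```python
import re

# Matches the ▼ symbol or a bracketed footnote number such as [1],
# together with any whitespace just before it.
UNWANTED = re.compile(r"\s*(?:▼|\[\d+\])")


def clean_value(value: str) -> str:
    """Remove footnote markers and the ▼ symbol from a scraped value."""
    return UNWANTED.sub("", value).strip()


if __name__ == "__main__":
    print(clean_value("Automotive ▼[1] [2] [3] [4]"))  # Automotive
```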

We have one last and very important step to complete. Return to the Source view and click Export. Then choose a name and destination for your file.

It is very important that you export your scraper if you plan to use it again or to create additional scrapers for the same URL. Remember that when we were creating our scraper we applied it to the URL: http://en.wikipedia.org/wiki/. In the future, if you create another scraper for Wikipedia you will also have to use this URL. If you were to do so, the new scraper would replace the existing one and all your work would be lost. By exporting and then importing your work, you can create many scrapers for the same URL.

To reload a scraper click Import, select your file, then Open.

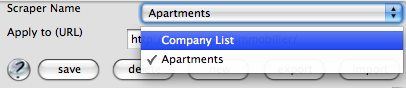

Occasionally, after you import your scraper, it may not appear. You will then have to select it from the drop-down box where the Scraper Name is located.

This completes our tutorial, and, as always, we welcome any questions or feedback. We couldn’t do this without you.

by jcc

Tags: Mult URLs, scraper, Web Harvester

December 27th, 2008 at 11:38 pm

Love this tool! Just fantastic. My first scraper seems to be working great, but I need to add many many more than the 15 available data fields. Is there a way to add more?

January 7th, 2009 at 6:30 pm

In the last update the number of fields was changed from 15 to 20. Thank you for the compliment!

March 31st, 2009 at 3:19 pm

This is a great tool. However, I couldn’t figure out how to load information from multiple pages. For example, the 1st page contains records 1 through 75 of 378, the 2nd page contains another 75 records, and so forth. Is there a way to view it all at once in table, guess or text, and to create a scraper? Please advise.

Many thanks

March 31st, 2009 at 4:41 pm

If you uncheck ‘Empty’ in the table, guess, or scraper view, the information from the previous page(s) will remain in the view panel. You can use either ‘Next in Series’ or ‘Auto-Browse’ to navigate through the pages to load all of the information.

April 12th, 2009 at 12:19 am

Can I download the information from tables on several websites (700+) when

1) I have the weblinks, or

2) I do not have the complete weblinks but know the folder of the websites?

thanks.

May 7th, 2009 at 2:43 pm

Tom, if you have the weblinks, just put them in a text or html file and open it with the hub. Then you can check “catch selection” in the table view and browse through these urls.

I don’t really understand what you mean by “the folder of the websites”.

May 16th, 2009 at 11:42 pm

Hi,

From the previous “pr” post, what do you mean exactly by “Open it with the hub”? I did “File/Open” and opened a txt file from my hard disk where I saved 269 weblinks (URLs), but nothing could be seen in table view… My concern is the same as Tom’s: I would be happy to extract data from these stored URLs. A small tutorial would be welcome.

Outwit seems very promising.

thanks

May 18th, 2009 at 8:44 am

@flyingfish: Yes, you can open the text file with “file->open”. Then go to the “links” view, where you should see your links. Go to the “table” view and check “catch selection” on the bottom panel. Go back to the “links” view, select all URLs, right-click, and click on “browse selected URLs”.

If you have any problems with this, please use the bug report form (“Feedback” menu in outwit hub).

July 23rd, 2009 at 2:25 am

Nice article, tnx

July 28th, 2009 at 4:51 pm

How soon before we can import a list of URLs from txt or Excel? I like the generate sequence feature, but it’s only creating 15 URLs. As the other commenter said, they need to be able to add a lot more, and I second that. It would be great to have either an import option or have it generate as many as we need (…) Please let me know about future updates and what you plan to include with them.

Thanks

Dimi

July 28th, 2009 at 10:59 pm

Hello Dimitri,

It’s done! You can now open a txt file in the Hub and work with urls inside this file.

As for the sequence generation, we will soon release an OutWit Hub Pro with a number of new features and no limitations.

August 9th, 2009 at 10:21 am

Instead of working off of a list of links, can you add the ability to choose a URL and then have OutWit check all pages 2 or 3 levels deep?

In other words, I can’t generate a list of 1500 companies, but I know where the URL is and that the pages are “under” that starting URL.

August 13th, 2009 at 1:51 am

Outwit is a very promising product and is mostly doing what a friend needs, but I have found what appears to be a bug. I created a custom scraper to apply to a bunch of URLs that I catch using a regexp in the table view. Then just right-clicking on the list applies the scraper to the links. Works great. The problem comes when I try to export the scraper and import it on another machine. It seems to import just fine, but the scraper is never actually applied to the URLs. If I manually re-create the scraper on the new machine, then it works just as before. It appears that somehow the association of the scraper with the URL isn’t happening properly. Can you take a look at the import function?

thanks,

-jason

August 14th, 2009 at 10:59 am

Hi Jason,

Thanks for your report.

Could you please give us more information about the configurations of the two machines? Are they running the same version of OutWit?

This seems to be a bug, because we import and export scrapers among ourselves without problems.

Regards,

pr

August 14th, 2009 at 11:02 am

Hi Roderick,

If I understand correctly, the feature you want is named “dig” in OutWit Hub. Just load the start page and click on the two blue down arrows.

Regards,

pr

January 23rd, 2010 at 12:08 am

I am trying to set up a test where you can feed multiple URLs in a text file. I have set up a scraper to collect about 20 fields from a website, and there are hundreds of pages in that same format. When I try to extract the data, it stops after the first URL and only gives me that information. What am I doing wrong?

Thanks,

Mark

January 24th, 2010 at 6:30 pm

Hi Mark, did you perhaps enter the complete URL instead of just a part of it? Say you have to scrape pages 1 through 50 of myWebSite. Then you do not want to put http://www.myWebSite.com/Page1 as the URL to apply the scraper to, but only http://www.myWebSite.com/Page , for instance.

February 24th, 2010 at 2:41 am

Thanks for the article and your continued responses to questions. I think I must be missing something simple. The scraper does exactly what I want it to do except for one thing: I’m at a loss as to how to relate it back to the scraped URL. When I look in “Scraped” I see all of the information I need except for the URL that was scraped. When I export both the complete selection of “Scraped” and the “Links” for the text file I’m using to .xls, the information looks right overall, but is not in the same order. How do I relate the two? Thanks in advance.

February 24th, 2010 at 2:48 pm

CC,

This is another problem due to the lack of documentation, sorry (we are working on it… slowly, but we are): By default, the first column ‘URL Source’ is hidden in all views. You can unhide it by clicking on the column picker button in the top right corner of the scraped datasheet. This should be the info you are missing in order to map the data you extract.

Another thing: for these messages, please use the feedback form on our website. Each message generates a ticket, and this allows us to follow up on problems more easily.

Cheers,

JC

May 12th, 2010 at 8:08 am

Great job guys. Very helpful. I look forward to the PRO version!

June 3rd, 2010 at 2:20 am

Hi there,

I just downloaded Outwit Hub and it is absolutely superb. The best tool that I have ever found on the internet!

One quick question – I am scraping a load of pages from a site and there are two possible formats for the pages, so I need two scrapers. Is there any way of telling the program to use one scraper if the other doesn’t work in a certain case? If you could build in some sort of IF functionality that would be cool.

ie if scraper 1 had a telephone number extractor and 2 had a Skype number extractor then you could have a condition so that :

IF there is a phone number then Scraper 1. If not, then use scraper 2.

Superb work chaps and I hope it makes you rich as you deserve it for the amount of hours that you are saving the world of computer users!!

Andy

June 3rd, 2010 at 7:15 pm

Thanks a lot Andy 🙂

This is absolutely the kind of intelligence we want to add with the scripts. For now, as you will see in the Hub Pro Beta, macros will allow you to apply extractors automatically; then, with the scripting engine and enhanced scrapers, you’ll pretty much have the control you need for all that.

Note: Please use the feedback link on our site for bug reports, suggestions, ideas and to sign in for the beta testing of OutWit Hub Pro.